Mask Scoring R-CNN

Summary

Instance segmentation aims at accurate pixel-level classification of each detected object. It requires more detailed labels and more delicate network structures than object detection. However, existing instance segmentation methods, such as Mask R-CNN and MaskLab, simply take the box-level classification confidence, predicted by a classifier applied to the proposal feature, as the score of the instance mask. Using the classification score of a proposal to measure mask quality is inaccurate: a detection can receive a high box confidence score even though its mask is of low quality (for example, incomplete), and ranking masks by such scores lowers AP. To tackle this misalignment between classification confidence and mask quality, the authors propose Mask Scoring R-CNN, a model that learns to score the mask.

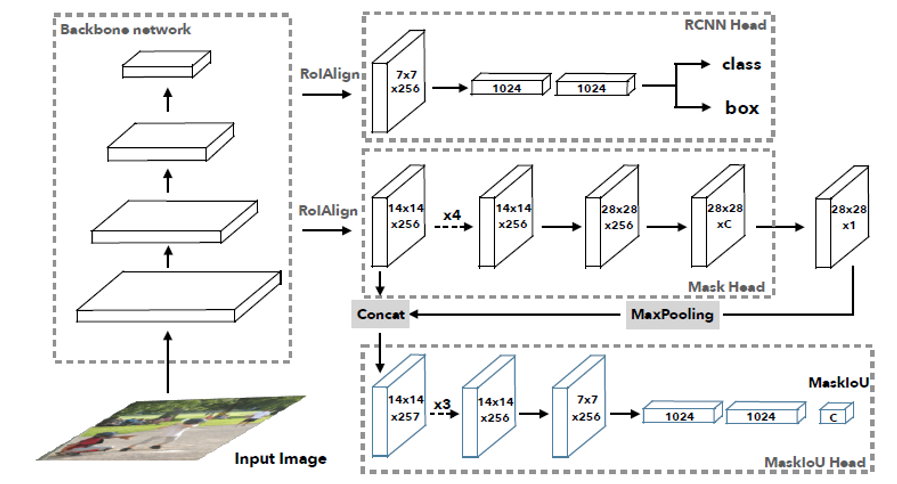

The mask scoring strategy is inspired by the AP metric, which uses the pixel-level Intersection-over-Union between a predicted mask and its ground-truth mask, denoted MaskIoU. The implementation of Mask Scoring R-CNN is conceptually simple: it is Mask R-CNN plus a MaskIoU prediction network, named the MaskIoU head, which takes both the output of the mask head and the RoIAlign feature as input and is trained with a simple regression loss on MaskIoU. Following Mask R-CNN, Mask Scoring R-CNN has two stages: the first is the Region Proposal Network (RPN), which proposes candidate object bounding boxes regardless of object category; the second is the R-CNN stage, which extracts features for each proposal using RoIAlign and performs several tasks: proposal classification, bounding-box regression, and mask prediction. The difference is that the top-k boxes selected by SoftNMS from the R-CNN head are fed into the mask head to generate multi-class masks.
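To make the MaskIoU quantity concrete, here is a minimal sketch of pixel-level IoU between two binary masks. The function name `mask_iou`, the list-of-lists mask representation, and the convention of returning 0.0 for two empty masks are assumptions made for illustration; they are not taken from the paper's code.

```python
def mask_iou(pred_mask, gt_mask):
    """Pixel-level IoU between two binary masks (equal-sized 2D lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                intersection += 1
            if p or g:
                union += 1
    # Assumption: two empty masks score 0.0 rather than raising an error.
    return intersection / union if union else 0.0


# Toy example: the prediction misses the right-most column of the object.
pred = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
gt = [
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
iou = mask_iou(pred, gt)  # 4 shared pixels over 6 pixels in the union
```

In this toy case the box classifier could still be very confident about the object class, while the mask itself covers only two thirds of the union.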

The innovation of this model is its concise and effective mask scoring mechanism. The authors argue that the final mask score (defined as $s_{mask}$) should reflect two tasks: mask classification and MaskIoU regression. The mask score learning task is therefore decomposed into two parts, denoted as $s_{mask} = s_{cls} \cdot s_{iou}$. The box classification in the R-CNN head predicts $s_{cls}$, and the MaskIoU head predicts $s_{iou}$.
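The effect of combining the two predictions can be sketched as a simple rescoring step, assuming the final mask score is the product of the classification score and the predicted MaskIoU. The dictionary keys and the example numbers below are hypothetical and chosen only to show how the ranking can change.

```python
def mask_score(cls_score, pred_mask_iou):
    """Mask score as classification confidence times predicted MaskIoU (assumed form)."""
    return cls_score * pred_mask_iou


# Hypothetical detections: the first has a confident box but a poor mask.
detections = [
    {"name": "a", "cls_score": 0.98, "pred_mask_iou": 0.55},
    {"name": "b", "cls_score": 0.90, "pred_mask_iou": 0.92},
]

# Ranking by classification confidence alone puts the poor mask first.
ranked_by_cls = sorted(detections, key=lambda d: d["cls_score"], reverse=True)

# Ranking by the combined mask score puts the better mask first.
ranked_by_mask = sorted(
    detections,
    key=lambda d: mask_score(d["cls_score"], d["pred_mask_iou"]),
    reverse=True,
)
```

Because AP is computed over score-ranked masks, moving well-segmented instances ahead of poorly segmented ones is how a better-calibrated score can raise AP without changing the masks themselves.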

The MaskIoU head consists of 4 convolution layers and 3 fully connected layers. To regress the MaskIoU, its input is the concatenation of the feature from the RoIAlign layer and the predicted mask, where a max pooling layer halves the size of the predicted mask so that it matches the RoI feature. The output of the final fully connected layer predicts a MaskIoU for each of the C classes. To train the MaskIoU head, the authors adopt an L2 loss between the predicted MaskIoU and the matched MaskIoU target. The whole network is trained end to end (Fig. 1).
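The regression target and loss can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the predicted mask is binarized at a threshold of 0.5, the loss is a plain squared error without any scaling factor, and only the output for the ground-truth class is penalized. All function and variable names are hypothetical.

```python
def binarize(prob_mask, threshold=0.5):
    """Turn a 2D list of mask probabilities into a 0/1 mask (threshold is an assumption)."""
    return [[1 if p > threshold else 0 for p in row] for row in prob_mask]


def mask_iou(mask_a, mask_b):
    """Pixel-level IoU between two equal-sized binary masks."""
    pairs = [(a, b) for row_a, row_b in zip(mask_a, mask_b) for a, b in zip(row_a, row_b)]
    intersection = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return intersection / union if union else 0.0


def maskiou_l2_loss(per_class_pred, gt_class, target_iou):
    """Squared error on the MaskIoU predicted for the ground-truth class only."""
    diff = per_class_pred[gt_class] - target_iou
    return diff ** 2


# Toy 2x2 example: predicted mask probabilities and the matched ground-truth mask.
prob_mask = [[0.9, 0.8], [0.2, 0.6]]
gt_mask = [[1, 1], [0, 0]]

# Regression target: IoU between the binarized prediction and the ground truth.
target = mask_iou(binarize(prob_mask), gt_mask)  # 2 shared pixels / 3 in the union

# The head outputs one MaskIoU per class; here C = 3 and the true class is 1.
per_class_pred = [0.10, 0.50, 0.30]
loss = maskiou_l2_loss(per_class_pred, gt_class=1, target_iou=target)
```

Restricting the loss to the ground-truth class means the other C - 1 outputs receive no gradient for that sample in this sketch.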

Fig. 1: Mask Scoring Network Structure Overview.

The authors conducted extensive experiments on the COCO dataset, which has 80 object categories, and reported results using the COCO AP evaluation metric. Results with different backbone networks (ResNet-18/50/101) under the same framework (FPN) demonstrate that adding the MaskIoU head brings noticeable and stable AP improvements in instance segmentation and that the method is insensitive to the choice of backbone. Furthermore, the authors reported results with the same backbone (ResNet-101) under different frameworks, including Faster R-CNN, FPN, and DCN+FPN, showing that the proposed MaskIoU head brings consistent improvement.

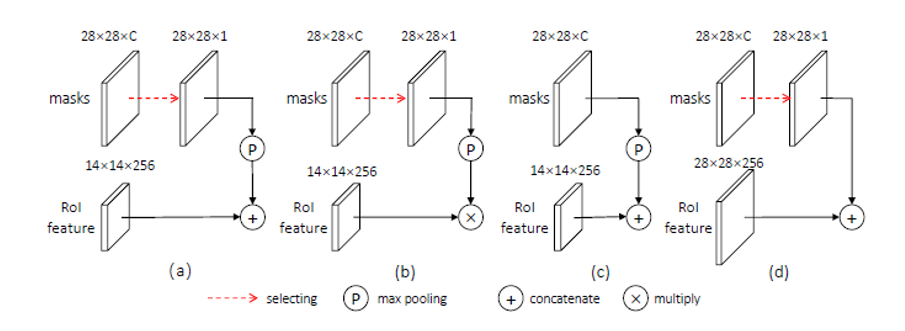

To evaluate the MaskIoU method comprehensively, the authors used ResNet-18 FPN for all ablation experiments. First, the study of different design choices for the MaskIoU head input (Fig. 2) shows that concatenating the target score map with the RoI feature obtains the best results, so it is used as the default choice. The authors also explored the choice of training target and found that learning the MaskIoU of the target category gives the best performance.

Finally, they discussed how to select training samples; the results show that training on all examples, without a box-level IoU threshold filter, obtains the best performance.

Fig. 2: Different design choices of the MaskIoU head input.

For further discussion, the experiments show that the MaskIoU predictions correlate well with the ground truth. The authors also discuss the upper-bound performance of the model, which consistently outperforms Mask R-CNN with an increase of about 2% AP, and there is still room to improve. In addition, the MaskIoU head costs only about 0.39G FLOPs per proposal, so its computation cost is negligible.

The MaskIoU head proposed in this paper effectively aligns mask scores with MaskIoU and surpasses state-of-the-art methods. It can also be applied easily to other instance segmentation networks and offers a new direction for improving instance segmentation. Nonetheless, MS R-CNN still leaves a lot of room to explore and extend. Finding a more effective loss function to improve the segmentation of small objects is a noteworthy direction. In addition, reducing the number of network branches, or parallelizing the network around the MaskIoU regression, is worth considering for the tradeoff between precision and speed.